“Responsible AI is built-in, not bolted on”

K R Jayakumar

1. Introduction

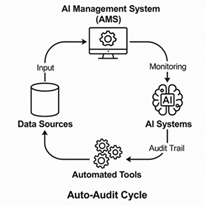

In today’s dynamic AI landscape, robust, automated tools for demonstrating compliance with standards such as ISO/IEC 42001 are more critical than ever. ISO/IEC 42001:2023 specifies requirements for establishing, implementing, maintaining, and continually improving an AI management system (AIMS), with an emphasis on transparency, traceability, and accountability in how organizations develop, provide, or use AI systems. This document outlines an approach to implementing ISO 42001 with the help of established tools, based on my initial survey of the tool landscape for AI compliance and model evaluation.

2. The Need for Automated Tools in ISO 42001

ISO 42001 does not mandate automation, but automated tools make its requirements far more practical to meet, for several reasons:

- Inherent Differences from Traditional Systems: Traditional systems are often static once deployed, whereas AI systems—including machine learning models, large language models (LLMs), and AI agents—are dynamic. These systems require continuous oversight to track performance and respond to emergent issues such as model drift and unforeseen biases. Automated tools enable sustained monitoring and iterative improvement.

- Bias Detection, Explainability, and Reliability: Detecting bias, ensuring explainability, and maintaining reliability require processing large volumes of data, often with significant computing resources. Automated tools compute repeatable, quantitative metrics, such as the difference in selection rates between demographic groups, that make fairness and system integrity measurable.

- Dynamic Nature: Some AI systems continue to learn and adapt after deployment, and even models that are frozen at release operate on data that shifts over time. As data, environmental conditions, and regulatory requirements evolve, continuous monitoring via automated tools becomes indispensable to keep the system aligned with current norms.

- Scale Challenges: A single LLM deployment can process millions of prompts a day, which makes manual audits impractical. Automated tools provide the precision and speed needed to keep every decision traceable and every metric accurately recorded.

- Regulatory Traceability: Detailed audit trails are a regulatory necessity. Automation helps ensure that every stage of an AI system’s lifecycle, from data ingestion to model predictions, is documented and traceable for audits.
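
The traceability point can be illustrated with a minimal, tamper-evident audit trail: each record stores the SHA-256 hash of the previous record, so altering or reordering earlier entries breaks the chain. This is a sketch using only the Python standard library; the `AuditTrail` class, its field names, and the sample events (`loans_v3`, `credit_v2`) are hypothetical and do not reflect the API of any tool named in this document.

```python
import hashlib
import json
from datetime import datetime, timezone


class AuditTrail:
    """Hypothetical append-only log; each entry is chained to the previous one by hash."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(body: dict) -> str:
        # Canonical JSON (sorted keys) so identical content always hashes identically
        return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()

    def append(self, event: str, details: dict) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        body = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "details": details,
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": self._digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Return False if an entry was altered or the chain was broken (e.g. entries reordered)."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or self._digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.append("data_ingested", {"dataset": "loans_v3", "rows": 10000})
trail.append("prediction", {"model": "credit_v2", "input_id": 42, "output": "approve"})
print(trail.verify())  # True

trail.entries[0]["details"]["rows"] = 9000  # simulate tampering with an earlier record
print(trail.verify())  # False
```

Hash chaining detects tampering but does not prevent it, and it cannot detect the most recent entries being dropped, so one would typically also replicate such a log to write-once storage or a logging platform.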

“Once you see how automation transforms mundane compliance into strategic insight, there’s no going back.”

3. Tools for ISO 42001: A Comprehensive Framework

To address the requirements of ISO 42001, my approach groups tools into five categories:

3.1 Governance, Risk, Privacy & Security Management

- Purpose: Ensure robust, end-to-end governance covering risk assessment, privacy, and security.

- Notable Tools:
  - IBM watsonx.governance
  - Credo AI
  - Fiddler AI
  - Splunk

You may notice that Governance, Risk, Privacy, and Security have been grouped into a single category. This consolidation reflects the significant overlap in functionality among tools in these domains, as many solutions address multiple aspects simultaneously.

3.2 AI Model Evaluation

- Purpose: Provide thorough evaluation of bias, fairness, explainability and performance for both LLMs and traditional machine learning models.

- Notable Tools:
  - Fairlearn (Microsoft)
  - IBM AI Fairness 360 (AIF360)
  - Weights & Biases
  - Opik, Ragas, TruLens

Note: Some tools support both LLMs and traditional ML models, while others are restricted to one or the other (see Table 1). I have not yet explored specific support for agentic AI.

Read my blog for more details on LLM evaluation frameworks: “Are LLM Evaluation Frameworks the Missing Piece in Responsible AI?” https://wp.me/pfqMXl-2R
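
As an illustration of the kind of metric these tools report, the sketch below computes the demographic parity difference (the gap between the highest and lowest selection rates across groups) using only the Python standard library. Fairlearn exposes a comparable metric as `fairlearn.metrics.demographic_parity_difference`; the functions and data here are hypothetical and meant to show the arithmetic, not to replace a library.

```python
from collections import defaultdict


def selection_rates(y_pred, groups):
    """Fraction of positive (1) predictions for each group."""
    positives = defaultdict(int)
    totals = defaultdict(int)
    for pred, group in zip(y_pred, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(y_pred, groups):
    """Largest minus smallest selection rate; 0.0 means equal rates across groups."""
    rates = selection_rates(y_pred, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical binary predictions (1 = approved) for applicants in two groups
y_pred = [1, 1, 0, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(y_pred, groups))                # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(y_pred, groups))  # 0.5
```

A value of 0.5 here means group A is approved three times as often as group B; what threshold counts as acceptable is a policy decision, not something the metric decides.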

3.3 Documentation Management

- Purpose: Facilitate complete traceability and documentation required for audits and continual reference.

- Notable Tools:
  - Confluence
  - DokuWiki

Many organizations rely on tools like SharePoint, internal intranet platforms, or custom-built workflow systems for document review, approval, publication, and version management. These solutions can be effective, provided they incorporate strong document control measures, robust security protocols, and auditability features to ensure compliance and traceability.
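
Whatever platform is used, the core document-control rules can be stated in a few lines. The sketch below assumes a simple in-memory register with hypothetical names (`ControlledDocument`, `AI-POL-001`, reviewers `alice` and `bob`); it keeps every revision with a content hash, prevents authors from approving their own revisions, and refuses to publish anything that has not been approved. In practice these controls would be configured through the chosen platform’s versioning, permission, and workflow settings rather than written by hand.

```python
import hashlib
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Revision:
    version: int
    content_sha256: str  # fingerprint of the exact text that was reviewed
    author: str
    approver: Optional[str] = None


@dataclass
class ControlledDocument:
    """Hypothetical controlled document: all revisions are kept; only approved ones can be published."""

    doc_id: str
    revisions: List[Revision] = field(default_factory=list)
    published_version: Optional[int] = None

    def submit(self, content: str, author: str) -> Revision:
        rev = Revision(
            version=len(self.revisions) + 1,
            content_sha256=hashlib.sha256(content.encode("utf-8")).hexdigest(),
            author=author,
        )
        self.revisions.append(rev)
        return rev

    def approve(self, version: int, approver: str) -> None:
        rev = self.revisions[version - 1]
        if approver == rev.author:
            # Segregation of duties: authors cannot approve their own revisions
            raise PermissionError("author cannot approve own revision")
        rev.approver = approver

    def publish(self, version: int) -> None:
        if self.revisions[version - 1].approver is None:
            raise PermissionError(f"version {version} has not been approved")
        self.published_version = version


policy = ControlledDocument("AI-POL-001")
policy.submit("Draft AI policy text", author="alice")
policy.approve(1, approver="bob")
policy.publish(1)
print(policy.published_version)  # 1
```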

3.4 Incident Management

- Purpose: Enable rapid response to and resolution of any incidents or breaches in the AI system’s operations.

- Notable Tools:
  - Jira Service Management
  - Splunk

A wide range of tracking tools—both open-source and commercial—can be configured to support incident management. Organizations have the flexibility to adopt existing solutions or develop custom tools, provided they incorporate the core principles of incident management, including structured workflows, automation, and real-time monitoring for effective resolution and auditability.
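
The structured-workflow principle can be sketched as a small state machine in which only predefined status transitions are allowed and every change is timestamped for later audit. The statuses, the transition table, and the sample incident below are illustrative assumptions, not the workflow of Jira Service Management or any other product.

```python
from datetime import datetime, timezone
from enum import Enum


class Status(Enum):
    OPEN = "open"
    TRIAGED = "triaged"
    MITIGATED = "mitigated"
    RESOLVED = "resolved"
    CLOSED = "closed"


# Hypothetical workflow: the statuses reachable from each status
ALLOWED = {
    Status.OPEN: {Status.TRIAGED},
    Status.TRIAGED: {Status.MITIGATED, Status.RESOLVED},
    Status.MITIGATED: {Status.RESOLVED},
    Status.RESOLVED: {Status.CLOSED, Status.TRIAGED},  # reopen if the fix did not hold
    Status.CLOSED: set(),
}


class Incident:
    def __init__(self, incident_id: str, summary: str, severity: str):
        self.incident_id = incident_id
        self.summary = summary
        self.severity = severity
        self.status = Status.OPEN
        # (timestamp, from_status, to_status, note); kept so the incident can be audited later
        self.history = [(datetime.now(timezone.utc), None, Status.OPEN, "reported")]

    def transition(self, new_status: Status, note: str) -> None:
        if new_status not in ALLOWED[self.status]:
            raise ValueError(f"{self.status.value} -> {new_status.value} is not allowed")
        self.history.append((datetime.now(timezone.utc), self.status, new_status, note))
        self.status = new_status


inc = Incident("INC-1", "Bias spike in loan approvals", severity="high")
inc.transition(Status.TRIAGED, "confirmed by fairness dashboard")
inc.transition(Status.MITIGATED, "rolled back to previous model version")
inc.transition(Status.RESOLVED, "retrained model passed fairness checks")
inc.transition(Status.CLOSED, "post-incident review completed")
print(inc.status.value, len(inc.history))  # closed 5
```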

3.5 Continual Improvement

- Purpose: Ensure real-time oversight and data-driven enhancement of AI systems.

- Notable Tools:
  - Grafana
  - Tableau

Tools in this category primarily serve as data analytics solutions. Any data analytics tool equipped with strong visualization capabilities can effectively monitor key metrics, extract meaningful insights, and showcase improvements over time—making them well-suited for supporting continual improvement initiatives.
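
As an example of a key metric such dashboards might track, the sketch below computes the Population Stability Index (PSI), a widely used measure of how far a model’s recent input or score distribution has drifted from a baseline: PSI = sum over bins i of (a_i - e_i) * ln(a_i / e_i), where e_i and a_i are the baseline and recent proportions in bin i. The equal-width binning, the `eps` smoothing, and the 0.1 / 0.25 thresholds are common conventions rather than requirements of ISO 42001, and the sample scores are synthetic.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index of `actual` relative to the baseline `expected`.

    Bins are equal-width over the baseline's range; values outside that range are
    clipped into the first or last bin. `eps` replaces empty-bin proportions to avoid log(0).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_level(score):
    # Commonly cited rule of thumb, not a formal standard
    if score < 0.1:
        return "stable"
    if score <= 0.25:
        return "moderate"
    return "significant"


# Synthetic model scores: a uniform baseline and a recent window shifted upward
baseline = [i / 100 for i in range(100)]
shifted = [min(v + 0.3, 0.99) for v in baseline]

print(psi(baseline, baseline))              # 0.0
print(drift_level(psi(baseline, shifted)))  # significant
```

A score like this could be computed on a schedule and pushed to a dashboard tool such as Grafana or Tableau, with an alert when it crosses the chosen threshold.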

4. Key Tool Features and Comparative Analysis

One critical aspect of responsible AI governance is distinguishing tools that support large language models from those suited to traditional machine learning. Table 1 outlines key features of various tools and categorizes them under three licensing models:

- Free and Open-Source Software (FOSS): Completely free to use, with openly accessible source code for modification and distribution.

- Freemium: Provides free access with limitations, such as restricted features, usage caps, or a trial period, with full functionality available through paid upgrades.

- Commercial: Requires a paid subscription or license fee for access and use.

| Tool | License Type | LLM Support | Traditional ML Support | Key Feature |
|---|---|---|---|---|
| Fairlearn | FOSS | No | Yes | Bias mitigation in classification/regression models |
| IBM AIF360 | FOSS | No | Yes | Bias mitigation |
| Opik | FOSS | Yes | No | LLM evaluation framework |
| Ragas | FOSS | Yes | No | LLM evaluation framework |
| TruLens | FOSS | Yes | No | LLM evaluation framework |
| MLflow | FOSS (paid managed offerings available) | Yes | Yes | Model versioning and fine-tuning logs |
| Great Expectations | FOSS (paid cloud offering available) | Yes | Yes | Data validation for AI training data |
| Weights & Biases | Freemium | Yes | Yes | Experiment tracking |
| IBM watsonx.governance | Commercial | Yes | Yes | End-to-end AI governance |
| Credo AI | Commercial | Yes | Yes | End-to-end AI governance |
| Fiddler AI | Commercial | Yes | Yes | End-to-end AI governance |

Table 1: Comparative Features of Key AI Evaluation and Governance Tools.

5. Mapping ISO 42001 Clauses to Automated Tools

A practical roadmap for aligning with ISO 42001 involves mapping specific clauses to relevant tool categories. The table below illustrates this mapping:

| ISO 42001 Clause | Tool Categories |
|---|---|
| 4 Context of the organization | |
| 4.1 Understanding the organization and its context | AI Governance, Risk, Privacy & Security Management |
| 4.2 Understanding the needs and expectations of interested parties | AI Governance, Risk, Privacy & Security Management |
| 4.3 Determining the scope of the AI management system | Documentation Management |
| 4.4 AI management system | Documentation Management |
| 5 Leadership | |
| 5.1 Leadership and commitment | Documentation Management |
| 5.2 AI policy | Documentation Management; AI Governance, Risk, Privacy & Security Management |
| 5.3 Roles, responsibilities and authorities | Documentation Management |
| 6 Planning | |
| 6.1 Actions to address risks and opportunities | AI Governance, Risk, Privacy & Security Management |
| 6.2 AI objectives and planning to achieve them | AI Governance, Risk, Privacy & Security Management; Documentation Management |
| 6.3 Planning of changes | Documentation Management |
| 7 Support | |
| 7.1 Resources | AI Model Evaluation; AI Governance, Risk, Privacy & Security Management |
| 7.2 Competence | Documentation Management |
| 7.3 Awareness | Documentation Management |
| 7.4 Communication | Documentation Management |
| 7.5 Documented information | Documentation Management |
| 8 Operation | |
| 8.1 Operational planning and control | Documentation Management |
| 8.2 AI risk assessment | AI Governance, Risk, Privacy & Security Management |
| 8.3 AI risk treatment | AI Governance, Risk, Privacy & Security Management |
| 8.4 AI system impact assessment | AI Governance, Risk, Privacy & Security Management |
| 9 Performance evaluation | AI Governance, Risk, Privacy & Security Management |
| 10 Improvement | Incident Management; Continual Improvement |

The mapping of tool categories to key ISO 42001 clauses offers a high-level view for selecting the automated tools best suited to an organization’s requirements. In addition, Annexes A through D of ISO 42001 provide further guidance that helps not only with tool selection but also with identifying the typical inputs needed to put those tools into practice.
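
To use the mapping for gap analysis, it can be encoded as data and queried for clauses that none of an organization’s current tool categories cover. The sketch below encodes only an excerpt of the clause-to-category table, with clause titles abbreviated; the `uncovered_clauses` function and the sample organization are hypothetical.

```python
# Tool category names as used in the mapping table
GOV = "AI Governance, Risk, Privacy & Security Management"
DOC = "Documentation Management"
EVAL = "AI Model Evaluation"
INC = "Incident Management"
IMP = "Continual Improvement"

# Excerpt of the clause-to-category mapping (not every clause is listed)
CLAUSE_TO_CATEGORIES = {
    "4.1 Context of the organization": {GOV},
    "4.3 Scope of the AI management system": {DOC},
    "5.2 AI policy": {DOC, GOV},
    "6.2 AI objectives and planning": {GOV, DOC},
    "7.1 Resources": {EVAL, GOV},
    "8.2 AI risk assessment": {GOV},
    "9 Performance evaluation": {GOV},
    "10 Improvement": {INC, IMP},
}


def uncovered_clauses(available_categories):
    """Clauses for which none of the mapped tool categories is in place yet."""
    available = set(available_categories)
    return [
        clause
        for clause, categories in CLAUSE_TO_CATEGORIES.items()
        if not categories & available
    ]


# Hypothetical organization with only documentation and governance tooling so far
print(uncovered_clauses({DOC, GOV}))  # ['10 Improvement']
```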

6. Conclusion and Call to Action

In the rapidly evolving realm of AI, ensuring robust, compliant, and responsible AI systems is not only an operational necessity but also a moral imperative. By integrating automated tools for governance, evaluation, documentation, incident management, and continual improvement, organizations can build an AI management system that supports conformity with ISO 42001.

While this document has focused primarily on automated tools for mainstream AI governance, specific agentic AI considerations have not been fully explored here. Some of the tools mentioned also address agentic AI, which is critical to preventing AI agents from going rogue, a significant concern in today’s AI deployments. I plan to publish an updated version of this document as more insights emerge into tools specific to agentic AI.

I invite all readers to share their experiences and insights with any of these tools. Let’s work together to ensure that this document evolves in step with the dynamic nature of the AI landscape, serving as an ever-improving resource for the community. By contributing to this evolving dialogue, we can set new benchmarks for responsibility, transparency, and innovation in AI.

“Transparency is the currency of trust in AI.” — Anonymous